Almost two years ago, Hashem Al-Ghaili, a Yemeni molecular biologist, science communicator, and filmmaker, posted a nine-minute video on social media introducing EctoLife, a supposed artificial-womb facility that, he claimed, could gestate 30,000 babies a year using a sophisticated genetic engineering system, with parents monitoring their child from their mobile phones as if it were a Tamagotchi. The video had such an impact that, days later, Al-Ghaili had to clarify that EctoLife was not a real company but only "a concept." An idea that was nonetheless, he insisted, perfectly feasible. "It's not science fiction," he warned. "The facility does not exist at this moment, but it is 100% based on science and over 50 years of research."

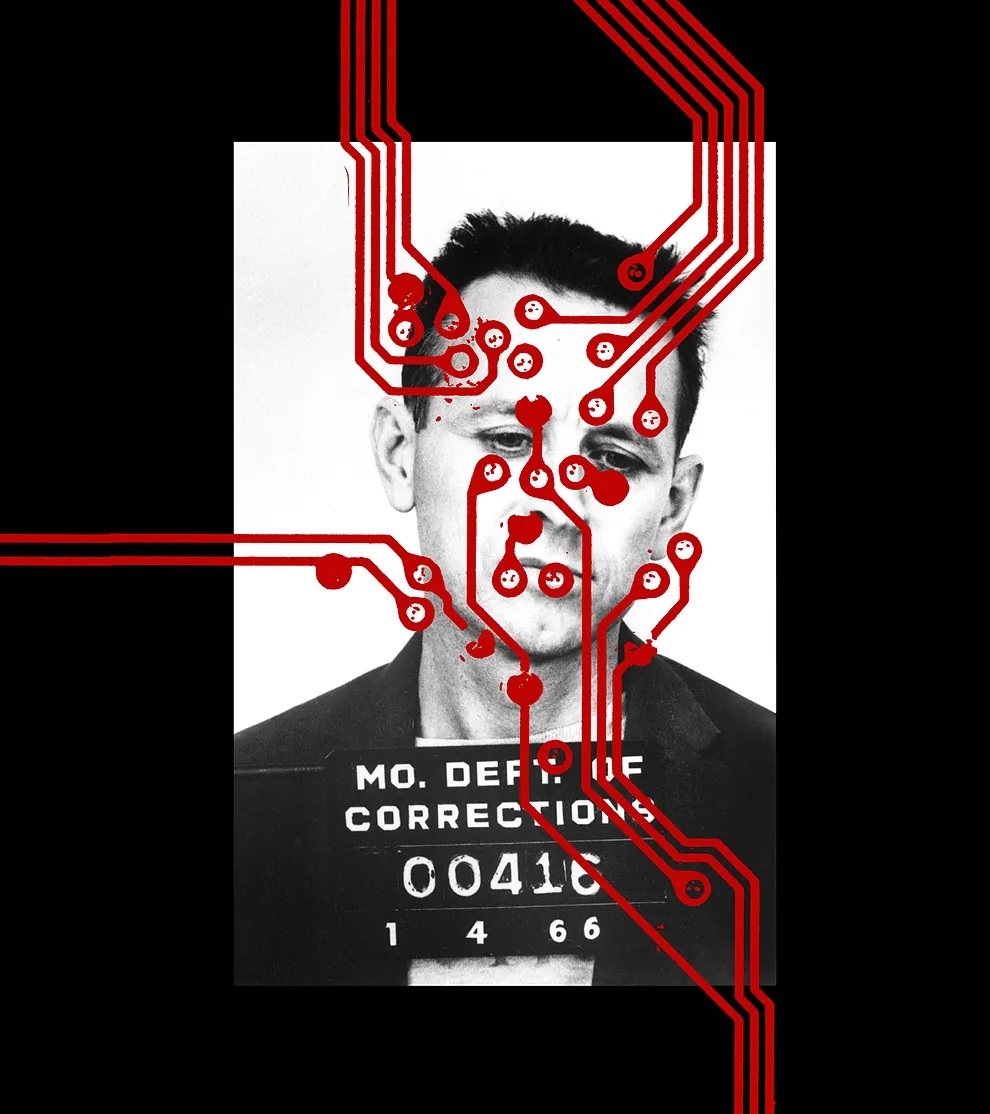

Months later, the phenomenon repeated itself with BrainBridge, his machine for transplanting heads the way one transplants a kidney. And now he is back with Cognify. "Welcome to the prison of the future," announces Al-Ghaili's latest video. "A facility designed to treat criminals as patients."

The Cognify project envisions a future of prisons without walls or bars, where inmates could serve their sentences in just a few minutes through the implantation of artificial memories in their brains. "These complex, vivid, and realistic memories are created in real time using content generated by artificial intelligence," explains its creator. "Depending on the severity of the crime and the sentence, memories could be tailored to the rehabilitation needs of each inmate. The prisoner can choose between spending decades in a cell or undergoing fast-track rehabilitation through the artificial implantation of memories."

The initiative, reminiscent of a Denis Villeneuve movie, does not seem very likely, at least in the short term. However, it has reignited the debate over the use of technology and artificial intelligence in the administration of justice, and over the future of correctional institutions. In Spain, several such systems are already in use, whether to speed up prison leave procedures or to monitor the radicalization of Islamist prisoners. But none has gone as far as Cognify... yet.

"It's a crazy and dangerous proposal," warns José Manuel Muñoz, a researcher at the International Center for Neuroscience and Ethics (CINET). "Crazy because it is not based on real science but on science fiction ideas we have seen in movies like A Clockwork Orange or Minority Report. And dangerous because, despite not being scientifically serious, the proposal defends and spreads the alarming idea that antisocial behavior should be corrected by modifying or reprogramming the brain."

Al-Ghaili seems unfazed by the criticisms. "The science backing it already exists, but ethical boundaries prevent it from becoming a reality," he complained a few months ago in the tech magazine Wired.

The instruction manual for his invention starts with a high-resolution brain scanner that would create a detailed map of each patient's neural pathways to detect the regions responsible for memory, reasoning, and logical thinking. Once these regions are identified, the Cognify device would be placed around the prisoner's head like a motorcycle helmet and would apply personalized "synthetic memories" based on the crime committed, the prisoner's brain structure, and their psychological profile.

"Violent offenders could experience memories designed to evoke empathy and remorse. They could see their crime from the victim's perspective and feel their pain and suffering firsthand," says the video by the Yemeni biologist, whose machine could also induce emotional states such as remorse or regret, which he presents as crucial for rehabilitation. "Artificial memories could cover a wide range of crimes, such as domestic violence, hate crimes, embezzlement, insider trading, theft, and fraud."


From an ethical standpoint, José Manuel Muñoz explains that this project is a new version of a well-known concept: "moral enhancement." "It is an idea that, although it has its defenders, is highly questionable and has been criticized by broad sectors and numerous international specialists in bioethics," he says. "Modifying a person's brain to morally enhance them, even with their consent, assumes, among other things, that everything depends on the brain, as if we were machines. It also medicalizes criminal behavior, as if it were a disease easily cured with a pill. In this case, a brain chip."

Al-Ghaili argues that his idea would make it possible to better understand the criminal mind and to determine the best approach to each crime. It would also, he says, revolutionize the criminal justice system worldwide by significantly reducing the need for long-term incarceration, the size of the prison population, and the associated costs.

At the end of last month, the British government released the first 1,700 of the more than 5,000 prisoners it plans to release in the coming months to ease prison overcrowding. Spain is also among the European countries with the highest number of inmates, although the figures have remained stable in recent years. In 2021, at least eight EU countries had overcrowded prisons.

"Its proponents argue that neurotechnology could be the key to reducing recidivism and facilitating the reintegration of offenders into society. But is it really possible to change a person's nature by altering their thoughts through a brain chip? And, more importantly, is it legal and ethical?" asked Damián Tuset, a researcher in Public International Law and Artificial Intelligence, just a few weeks ago in an article titled "Brain Chip for Inmates: A Prison Inside the Head."

Tuset now acknowledges that, from a technical standpoint, Cognify could become a reality in the medium term, but that the moral and legal questions it raises remain unresolved. "A measure like that would have very interesting benefits in theory, but in practice it would directly affect a person's dignity," he explains. "Who decides which memories are implanted, or with what intensity? Who controls the technology? What prevents us from using that same technology to control other sectors of the population under the pretext of security or order? Today, a measure like this would be considered high risk."

The EU's Artificial Intelligence Act, approved at the end of last year, takes this into account. The regulation, agreed upon by the member states and the European Parliament, establishes a risk scale to classify the different artificial intelligence systems that may enter the market: a kind of technology traffic light. Just below "unacceptable risk" systems, which pose a clear threat to fundamental rights and will therefore be banned outright in Europe, sits a "high risk" tier that includes, among others, applications related to the administration of justice.

Just a few days ago, the Council of Europe also published specific recommendations to ensure that the use of AI by prison services respects human rights. "Technologies should not replace prison staff in their daily work and interaction with offenders, but rather assist them in that task," the text says. According to its guidelines, any AI-based decision that may affect human rights must be subject to human review and effective complaint mechanisms. In practice, however, things are not that simple.

"The complexity of reality surpasses the formal guarantees of the rules," summarizes María del Puerto Solar, a jurist with Spain's Penitentiary Institutions. "And although the recommendations state that the human evaluative factor must not disappear from risk assessment tools, I believe that in practice this is very hard to implement because of the prevailing technological bias. Reality is always more complex than what a machine can measure, but... who dares to contradict the machine today?"

"Will we ever see a prison without bars in Spain?"

"Justice involves a great deal of humanity, but we are losing it... I believe that with people deprived of liberty, we must work from their autonomy and toward their taking responsibility. This idea goes in the opposite direction: it is closer to lobotomizing inmates. In Spain, there are already several initiatives using artificial intelligence, but this goes a step further."

Spain already uses the VioGén system, which estimates the level of risk faced by women who are victims of gender-based violence. And in 2018, VeriPol was created: a computer application that uses artificial intelligence to estimate the probability that a report of violent robbery filed by an individual is false.

"From the perspective of legal guarantees, these are highly problematic tools," warns Puerto Solar. "They are not yet intelligent tools, as they will be in a few years. Right now, they only exploit data, making statistical risk predictions, which creates problems for effective judicial protection. If prison leave is denied on the basis of a risk percentage, it is very difficult for me to defend myself. I don't know what data went into that machine or what factors it considered. More attention is paid to the data than to the person, and in the end, it's like fighting against the elements. How do you defend yourself against something as opaque as an algorithm?"

Last year, Catalonia launched a pilot that uses artificial intelligence to analyze inmates' facial expressions and body language in footage from prison surveillance cameras, with the aim of preventing escapes, riots, or the smuggling of drugs into prison. Since 2009, the Catalan prison system has also used the RisCanvi risk assessment tool, which estimates the probability that a prisoner will breach the terms of their leave, behave violently in prison, or reoffend once released.

"Beware of the temptation to believe that just because we are using AI, we are closer to success," warns José Manuel Muñoz. "Predicting with precision whether a specific prisoner, by name, will reoffend or not is impossible, no matter how much AI we use. It is a very trendy technology, and I agree that it can bring us benefits, but there is too much hype around it. We run the risk of it getting out of hand, to the point of using it for everything and legitimizing intolerable practices just because AI has been used."

Last month, Colombia's Constitutional Court issued a pioneering ruling regulating the use of AI in the judiciary, following the controversial use of ChatGPT by a Colombian judge to support a decision in a tutela proceeding (an action for the protection of fundamental rights).

Last summer, a team of specialists in law, mathematics, and statistics from the University of Valencia (UV) and the Polytechnic University of Valencia (UPV) warned that three predictive tools used in Spain for police management and prison classification pose risks both to citizens' fundamental rights and of discrimination against vulnerable groups.

Their study, led by UV Criminal Law professor Lucía Martínez Garay, analyzed in detail how the VioGén, VeriPol, and RisCanvi tools work. They concluded that all three share general shortcomings in how they conform to the legal framework on data protection. "All these applications comply with the regulations, not because they are rigorously designed, but because the current framework is not particularly demanding," explains Martínez Garay, who criticizes the lack of transparency and information about how these algorithms operate in Spain, which makes it impossible to detect potential discriminatory biases or threats to citizens' rights.

In the United States, an algorithm called Compas has been used for years to assess the risk of recidivism among the prison population for granting prison permits, setting bail, or granting parole. The goal was to streamline the process and help judges make decisions based on objective data. However, it was soon discovered that the system had a bias that discriminated against African American prisoners.

"It is the absolute subordination of human decisions to those of a computer program, which affects the destiny of human beings, whether inmates or victims," explains the CINET researcher. "These irresponsible practices should be eradicated and certainly not emulated elsewhere."

How do these tools work in Spain? The closest equivalent to the American Compas is RisCanvi, a system developed in response to the public alarm caused in the 2000s by the recidivism of several sex offenders in Catalonia. Today, its software analyzes up to 43 factors, which may include the inmate's age, the date of their first offense, the violence of their acts, the length of their sentence, family history, educational level, economic resources, psychiatric diagnosis, and prison record, among others. The algorithm weighs all these factors and returns a risk level for five different outcomes: self-directed violence, violence within prison, breach of sentence, violent recidivism, and general recidivism.

For many years, the Catalan administration's opacity made it impossible to understand how the tool worked, but it is now audited annually. Data on its use are public, but the algorithm's inner workings remain a mystery.

"In general, these tools have a problem: false positives," explains Lucía Martínez Garay. "More than 90% of inmates assessed by RisCanvi as low risk of recidivism never reoffend, which is a success for the algorithm. But only 20% of those classified as high risk commit a violent act again. This means that the system gets it wrong with the other 80%," the Criminal Law professor adds. "The Administration will tell you that the system works well because recidivism has decreased, and that's true, but many people affected by this risk assessment are left defenseless, marked by an algorithm whose workings we do not fully understand and that no one dares to contradict, because it takes a lot of courage to oppose the machine."
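The two percentages Martínez Garay cites measure different things, which is why a tool can look reliable and still be wrong about most of the people it flags. The sketch below makes that arithmetic explicit for a purely hypothetical cohort of 1,000 inmates (the counts are illustrative assumptions chosen to match her proportions, not RisCanvi data): the share of "high risk" labels that turn out right is the positive predictive value (PPV), and the share of "low risk" labels that turn out right is the negative predictive value (NPV).

```python
def predictive_values(tp: int, fp: int, tn: int, fn: int) -> tuple[float, float]:
    """Return (PPV, NPV) for a binary risk classifier.

    tp: flagged high risk and did reoffend
    fp: flagged high risk but did not reoffend (false positives)
    tn: flagged low risk and did not reoffend
    fn: flagged low risk but did reoffend
    """
    ppv = tp / (tp + fp)  # share of "high risk" labels that were correct
    npv = tn / (tn + fn)  # share of "low risk" labels that were correct
    return ppv, npv


# Hypothetical cohort, NOT real RisCanvi figures:
# 100 inmates flagged high risk, 900 flagged low risk.
ppv, npv = predictive_values(tp=20, fp=80, tn=830, fn=70)
print(f"PPV = {ppv:.0%}, false positives among high-risk = {1 - ppv:.0%}, NPV = {npv:.0%}")
```

Run as written, it prints `PPV = 20%, false positives among high-risk = 80%, NPV = 92%`: a tool can be right about more than nine in ten of the people it clears while most of the people it labels dangerous never commit a violent act again.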

-So we will never be able to trust the judgment of an algorithm?

-Algorithms are here to stay, and they are more likely to spread than to fall out of use. The problem is that when artificial intelligence is added and they learn on their own, they will become even more opaque.

-Can you imagine a future where those prisoners at higher risk of recidivism can be rehabilitated with a chip in their brain?

-It is difficult to predict how this area will evolve, because technology is advancing faster than we think. Technically, I have no doubt that it can be done, that memories can be implanted... The question is whether we will allow it or not. And we must carefully weigh its advantages and disadvantages when the time comes to decide. I want to believe that, 10 years from now, we will not be walking around with brain-chip headsets as if they were mobile phones.

-And in 20 years?

-In 20 years, I dare not say.